Inspired by the recent frank reflection by UNHCR’s amazing Innovation Team on designing metrics for humanitarian innovation, we would like to share the lessons we have learned, the challenges we are addressing and our plans for measuring the impact of innovation in, and catalyzed by, UNDP. As a short background: in 2014, UNDP launched its Innovation Facility to unlock innovation for better development results at the country level and to help transform the organization. The Innovation Facility comprises a small core team of nine innovation advisors, with two based in Headquarters and the others operating from Regional Hubs in direct support of Country Offices and external partners. The key actors are the UNDP intrapreneurs and their partners in our programme countries who push the envelope and do development differently.

The Facility provides risk-capital to entrepreneurial UNDP offices, with the kind support of the Government of Denmark. Since 2014, the fund has supported more than 140 initiatives across 87 countries that showed potential for scaling, forging new partnerships, and catalysing new funding for human development. The fund is sector-agnostic and supports initiatives across UNDP’s strategic priorities and as such, across the full spectrum of the Sustainable Development Goals.

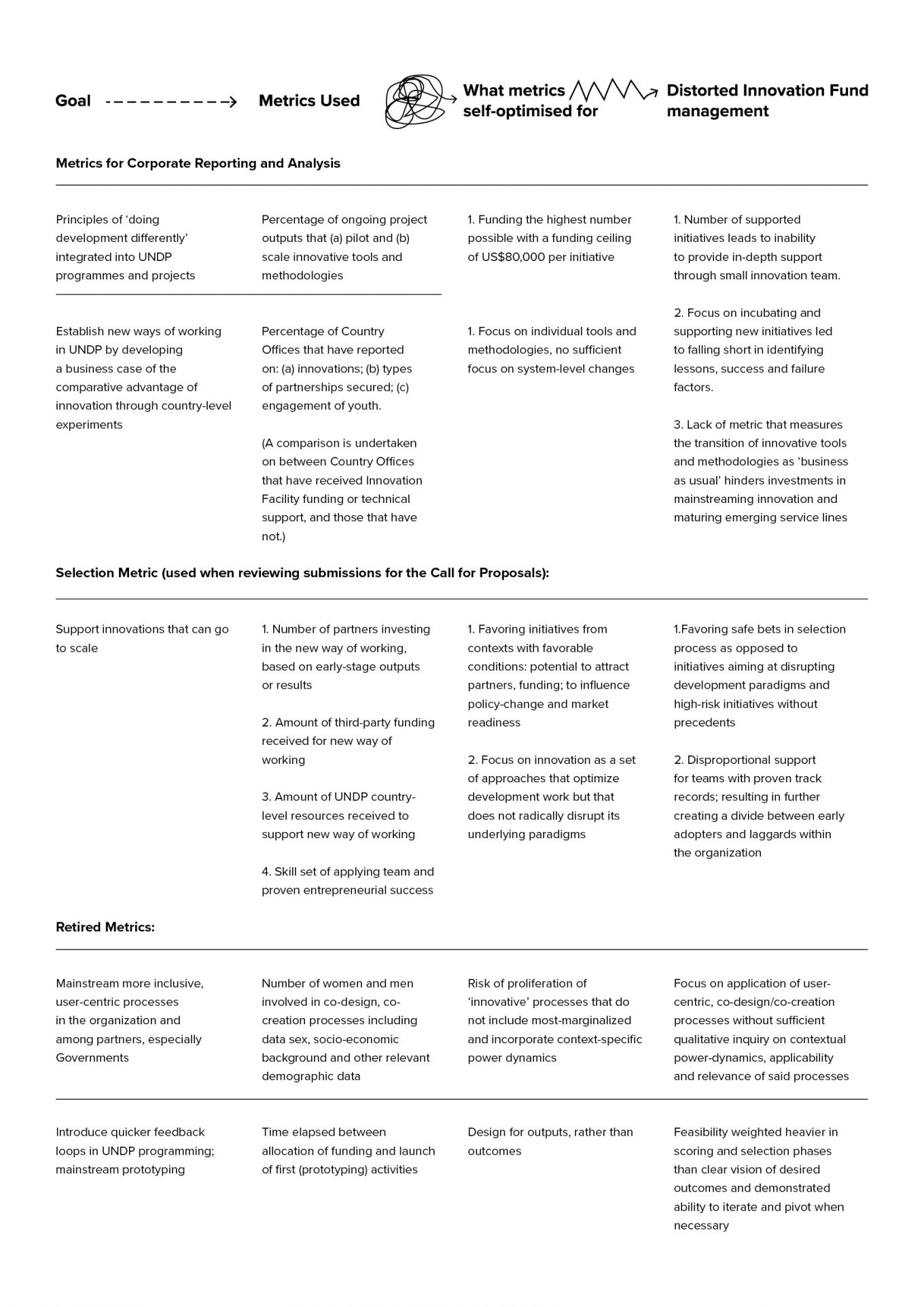

UNDP’s first small innovation fund was launched in 2011 in Eastern Europe and Central Asia. Since then, we have upgraded our modus operandi every year to reflect our lessons, especially the way we source and score proposals. In a few cases, we also pivoted elements of our measurement framework when we realized that some of the indicators were misaligned with long-term transformative goals. For example, in the early days, one of our goals was to introduce prototyping and quick feedback loops in UNDP’s programming. To accelerate this and to break with the traditional, rather slow-moving corporate processes, we requested proof that funds had been disbursed within 8 weeks of receipt. In numerous cases, this led to the design of outputs such as hackathons and other co-design processes that were not sufficiently embedded as milestones in a process to deliver development outcomes. The development world is rife with pilots, and it is increasingly populated with workstreams labeled as prototypes that focus on the novelty of a process or technology rather than on a solid vision of the desired development impact.

And so, in 2015 we changed our modus operandi and dropped speed of delivery as an indicator for the innovation fund. We also pivoted from a focus on individual innovators and their ideas to investing in venture teams that bring diverse capabilities to the table, including the ability to navigate power and politics, the ability to market the concept and raise follow-on resources, and the operational dexterity to articulate a business model that delivers the solution at scale.

From the get-go we agreed not to report vanity indicators. This includes the number of proposals received or ideas proposed at a given co-design event; the number of staff members and partners participating in innovation activities including trainings; the number of toolkits developed or the number of innovation blog posts published. (We source qualitative data to track how blog posts from entrepreneurial offices inspired new work tracks and the adaptation of innovation in other offices. We have yet to explore social network analysis tools to better understand the dynamics in our peer-learning and peer-inspiration networks.)

From day one, we realized that there is no universal set of metrics that can measure the impact of innovation investments across UNDP’s thematic areas. A key metric used by many actors in the global development innovation ecosystem is the actual or projected number of lives saved and improved. In our case, this would capture only part of the impact. For instance, in 2014 UNDP Georgia supported the Government in redesigning the national emergency services to make them accessible for people with hearing and visual disabilities. Our office led a co-design process, bringing together people with these disabilities, human rights activists, public service providers and Government decision-makers. User-centric methods helped to identify pain points in the existing service and led to testable prototypes. Fast-forward one year, and the emergency services had been redesigned on a national scale and made inclusively accessible. This led to the joint launch of Georgia’s Service Lab, a public innovation lab situated within the Ministry of Justice, which has contributed to the redesign of numerous public services and the establishment of digital service centres throughout the country. The Service Lab operates on a number of innovation principles, quite similar to the Principles for Development Innovation UNDP endorsed together with UNHCR among other actors. The principles of the Service Lab have been further endorsed and put into action by other line Ministries in the country, resulting in a profound change in how the Government operates and engages with its citizens.

Merely counting the number of people directly affected by the redesigned emergency service would not reflect the full range of impact of this innovation investment as it contributed to an incremental, yet significant institutional change. Therefore, an additional indicator would be key to further track impact: the potential to influence policy/systems change.

For this reason, we agree with each office that receives contributions from the innovation fund on the metrics used to measure the development impact of the respective initiative. To track whether the innovation is on a pathway to scale, we request data on whether the seed funding from the Facility has unlocked additional funding or co-investments from third parties, and whether it has created new partnerships. We also inquire about the inclusivity of the process, and about the cost-effectiveness and time efficiency of the new way of working in reaching targeted individuals and households, particularly at the base of the pyramid. This data is self-reported by UNDP Country Offices.

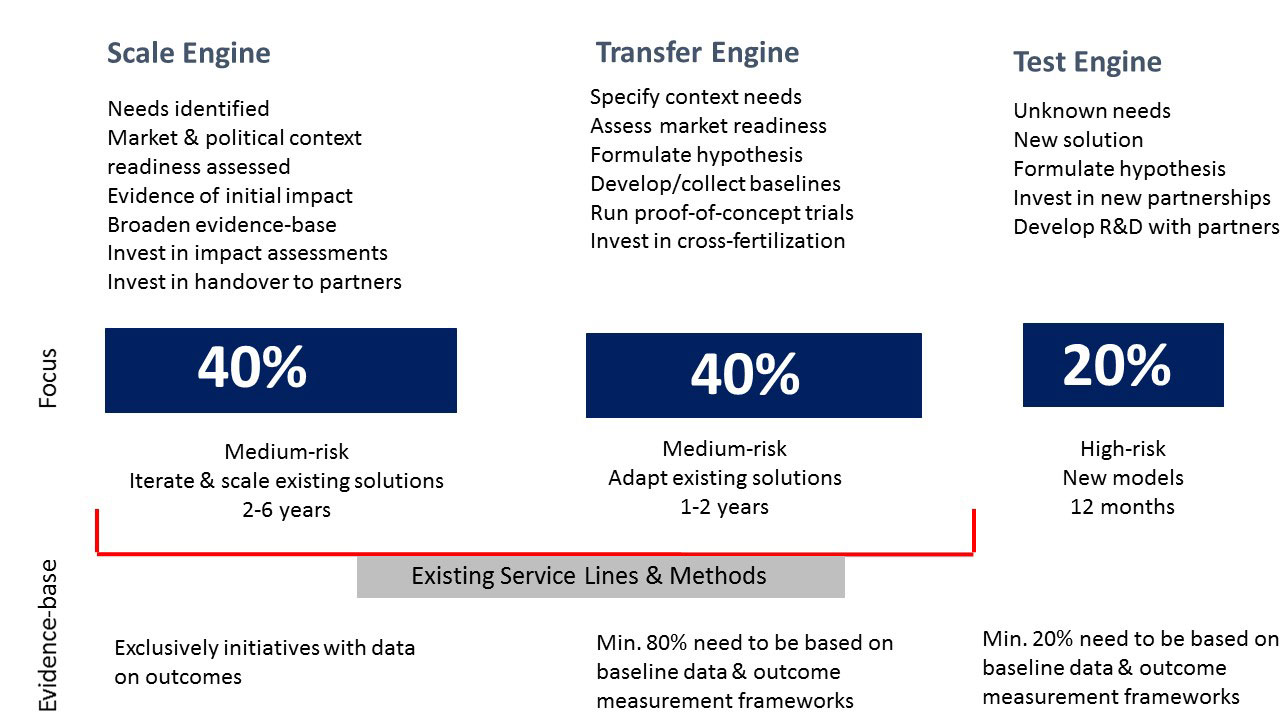

To ensure that the portfolio of the innovation fund invests sufficiently in scaling initiatives with initial evidence of success, we established a framework on the stages of scaling with partners from the International Development Innovation Alliance, which is specified in our joint publication ‘Measuring the impact of Innovation’. In our daily operations we break these stages down into proof-of-concept, early-stage and pathway to scale. This framework highlights how we split our investments across these three stages until 2017.

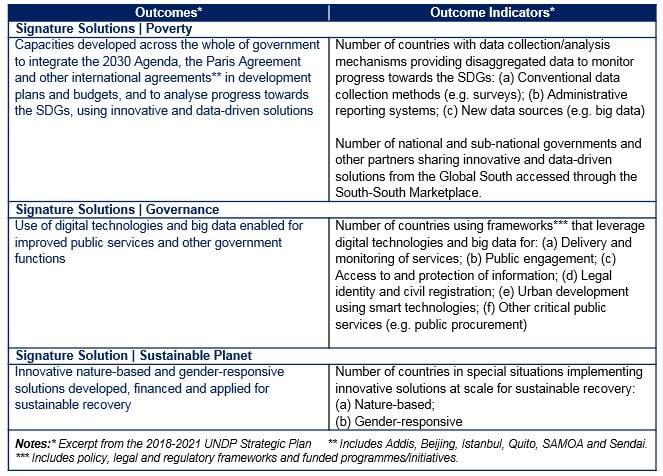

This framework and many of the indicators applied to date work well as proxy metrics to reveal the number of novel solutions being tested and scaled by UNDP. But, as UNHCR’s Innovation Team pointed out, they optimize for incremental innovations that have to come to fruition in relatively short time periods. Our team contributes to UNDP’s corporate transformative efforts. We map UNDP’s innovation journey and the value proposition of the Facility by analyzing the input in the Results-Oriented Annual Report (ROAR) from 135 Country Offices. The Facility further reports in the Integrated Resources and Results Framework (IRRF) of UNDP’s Strategic Plan. Both the survey and the framework track the progress of the UNDP Strategic Plan and annually produce data on the percentage of ongoing project outputs that (a) pilot and (b) scale innovative tools and methodologies. This indicator is strategically placed among the set of tier 3 indicators that track organizational effectiveness and efficiency. While innovation can help accelerate the achievement of development outcomes at scale across the Strategic Plan, from clean energy for all and leveraging private finance to fairer elections, there are 4 more tier 2 indicators that specifically track the uptake and impact of innovative approaches. (Take a look at the table at the bottom of the page for more details on these indicators.)

This led us to realize:

- Our metrics do not reflect some of our key goals

The corporate metrics on innovation capture only a part of the ambition of the Strategic Plan. This Plan actually puts a significant emphasis on innovation and underlines the need for innovation to transform systems. It specifically mentions systems-thinking skills as essential for UNDP and mandates that UNDP’s Signature Solutions be delivered through a set of Country Platforms, along with a Global Support Platform. Innovation is a key component of how these platforms and approaches need to go beyond incrementalism – a clear request from UNDP Administrator Achim Steiner.

Measuring the percentage of ‘innovative tools and methodologies that are being piloted or scaled’ is misaligned with the goal of eventually mainstreaming these tools and methodologies to (a) accelerate the achievement of the SDGs; and (b) impact the lives of people at scale, for the better. Also, to date the metrics of the innovation fund do not reflect the breadth of our strategic goals. There are no indicators on moving the organization to a state of permanent beta, nor on initiating systems-change processes that require a multitude of interventions over a prolonged period of time.

- Our goals need to be further refined and move beyond incremental innovation

Innovation for us goes beyond gizmos and gadgets. It serves as a driver to do development differently and better. This means holding development innovators to greater account than traditional development interventions. Innovation must prove its comparative advantage over the status quo, and it must also be monitored for unintended consequences. So we need to improve how we define success, as well as unintended consequences, for innovations from the early phases through to scaling. We also need to further specify what success looks like regarding change management: what exactly does it mean to create a culture change or an enabling environment? What incentives for innovation would be stronger than existing behavioural drivers (which are often implicit and linked to traditional ways of career progression)?

These concepts need to move from vague aspiration to measurable missions. This includes dedicated work on inspiring radical hope and radical changes in the mindsets of development practitioners. “To think that changing the world is possible without changing our mental models is folly”, as Joseph Jaworski puts it.

So here are three things we plan on doing differently:

- Specify change management goals and measure mainstreaming and maturing service lines

We will test metrics that monitor the institutionalization of innovation. For example, if we fully mainstream the collection of near real-time data through satellites and drones, and its analysis for improved decision-making, then this should not be counted in our corporate results framework as an ‘innovative approach’ any longer. We will also have to test the degree to which such systems can be gamed and create adverse effects as ‘ticking the box’ exercises. We see emerging models of mainstreaming in our Country Offices. In Rwanda for example, the UNDP office just institutionalized a mandatory budget line for all new projects to ensure investments in iterative design and qualitative inquiry to specify the problem statements. Yet, no global metric captures such mainstreaming success.

With Government partners, business, academia, and young engineers, UNDP Rwanda is testing the effectiveness of IoT technologies to improve climate resilience. How can investments in one innovation contribute to positive shifts in a larger system?

Our goal is to deliver on the SDGs, while leaving no one behind. This means that the SDGs are the very outcome targets and indicators we aspire to meet. Our hypothesis is that combining systems-thinking with ethically designed experiments can help us accelerate progress across the Goals in a more integrated way.

Here are a few input indicators we will test to mainstream innovation:

- Percentage of Country Offices and thematic teams that institutionalize foresight and horizon scanning functions

- Number of operational policies, partnership and financial vehicles launched based on a) demand from Country Offices; and/or b) results from country-level experiments

- Number of mature service lines available to deliver to partners, with the required infrastructure in place: internal capabilities, partnership agreements, policies (esp. on ethics, transparency and security), tools and methods

- Percentage of (senior) managers who have a dedicated goal in their annual performance assessment system that is predicated upon ‘risk expectation’ and which outlines a highly ambitious experiment
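As a rough illustration only, the two percentage-based indicators in this list could be computed from self-reported office data along the following lines. This is a minimal Python sketch: the `OfficeReport` record, its field names and the sample values are hypothetical and do not describe an existing UNDP data structure or real results.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OfficeReport:
    """Hypothetical self-reported record for one Country Office or thematic team."""
    name: str
    has_foresight_function: bool        # foresight / horizon scanning institutionalized
    senior_managers: int                # number of (senior) managers in the office
    managers_with_experiment_goal: int  # managers with an ambitious-experiment goal


def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage, or 0.0 when there is nothing to count."""
    return 100.0 * part / whole if whole else 0.0


def foresight_share(reports: List[OfficeReport]) -> float:
    """Percentage of offices/teams that report an institutionalized foresight function."""
    with_function = sum(1 for r in reports if r.has_foresight_function)
    return pct(with_function, len(reports))


def experiment_goal_share(reports: List[OfficeReport]) -> float:
    """Percentage of managers, pooled across offices, with an experiment goal."""
    total = sum(r.senior_managers for r in reports)
    with_goal = sum(r.managers_with_experiment_goal for r in reports)
    return pct(with_goal, total)


# Made-up sample data, for illustration only.
reports = [
    OfficeReport("Office A", True, 4, 1),
    OfficeReport("Office B", False, 6, 3),
    OfficeReport("Office C", True, 10, 1),
    OfficeReport("Office D", False, 0, 0),
]

print(f"Foresight functions: {foresight_share(reports):.1f}%")
print(f"Managers with experiment goal: {experiment_goal_share(reports):.1f}%")
```

Pooling managers across offices, rather than averaging per-office percentages, is one possible design choice; it keeps small offices from dominating the figure, but it is an assumption and not a stated UNDP method.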

In addition, we are working on finding ways to measure the degree to which we have de-risked ideas. How can we measure learning velocity and answer questions such as: How have the new skills led to added value for UNDP and its clients?

- Test right-fit monitoring models

To date, the data we receive on comparative advantage and impact is primarily self-reported by UNDP offices. Over the years, we have increased the percentage of grantees that design more rigorous mechanisms such as randomized controlled trials (RCTs), quasi-experimental methods and qualitative inquiry through micro-narratives. We are working on building the institutional infrastructure for RCTs. However, RCTs and other rigorous impact measurement methods are expensive and not always the right thing to do.

Moving forward, we will test the applicability of M&E models to gain (near) real-time actionable insights that help us learn and iterate quickly. This includes testing IPA’s Goldilocks Toolkit and the Lean Data model, designed by Acumen’s investment team.

Also, most data collected describe “backward-looking impact” or near-real-time impact. This very same data can be harnessed to enable forward-looking learning. We will test simulation and modelling approaches to be more responsive to emerging needs, more cost-effective in meeting those needs, and future-ready by building in room for community needs to evolve. This requires changes to data protocols and processes within the organization and in our collaboration with partners.

- Develop metrics to measure the contributions of innovation activities for systems-transformation and new development paradigms

For us, innovation means combining systems-thinking with ethically designed experiments to achieve the ambitious 2030 Agenda. One pathway to translate this into action is to formulate ambitious yet measurable missions derived from the SDGs, such as carbon-neutral cities by 2030. Such missions need to be specific, time-bound, cross-sectoral and cross-disciplinary, and they entail a portfolio of competing parallel solutions.

In light of the unprecedented pace of technological progress and the existential crisis facing our planet, there is a clear need to radically challenge dominant paradigms of how societies measure value creation, human fulfillment, freedom or happiness. How can modest investments advance emerging concepts such as societal innovation, and how can we measure the impact of their contributions? Indy Johar describes it as “a class of innovation, which functions in the interest of public good (as opposed to the community good) at a societal level and is essential in driving the development of cities. It is not limited to collective self-interest of a single community, but includes the interests of those who have not yet been born or arrived or are beyond the boundaries of one specific community.” How do we design metrics that take future liabilities adequately into account, yet capture even small steps towards progress? A number of the incremental innovations we support have the potential to contribute to systems-change, yet as one actor in a complex system we cannot claim causality. Mechanisms to contribute to systems-change include sense-making, network building across sectors, experiments, and co-creating movements and political bases for policy changes.

Moving forward, we will revise the investment framework to reflect dedicated budget lines for incremental innovation, for transformative innovation / systems-change, for mainstreaming innovation and for testing new business models. Knowing that there is no golden rule for splitting innovation portfolio investments across core, adjacent and transformational initiatives, we will start with an increased envelope of around 30% for transformational innovation.

A specific window will be earmarked for innovations that target previously unreached individuals and households at the base of the pyramid. We will assess opportunities to support fewer incremental innovations and cluster them in selected countries around clearly defined missions.

Our journey ahead mirrors the one by our friends at UNHCR Innovation. We could not put it in better terms: “We’d like to make mission-oriented investments combined with patient, long-term strategic finance while maintaining a sector-agnostic portfolio. We believe this is important because our mission and goals will address challenges that cross-sectoral boundaries, since one sector alone cannot solve the political, economic and environmental challenges.” If you work on designing metrics and measurement frameworks that have delivered systemic and system-wide changes on these questions, please get in touch.

To learn more about UNDP’s work on innovation, you can follow Ben here, Malika here and the main UNDP Innovation Facility account on Twitter.

Annex: