PLAN - Section 6: Monitoring and Evaluation Plan

Overview

Monitoring and evaluation (M&E) generates evidence for strategic planning, decision-making, advocacy, communication, and learning. This section aims to guide the development of the M&E plan. Planning for M&E is essential to ensure that processes and roles are clear within an operation and that all necessary activities are documented, costed, and aligned with strategic goals.

In a nutshell

- Operations develop an M&E plan to guide data collection, collation, and analysis throughout the strategy.

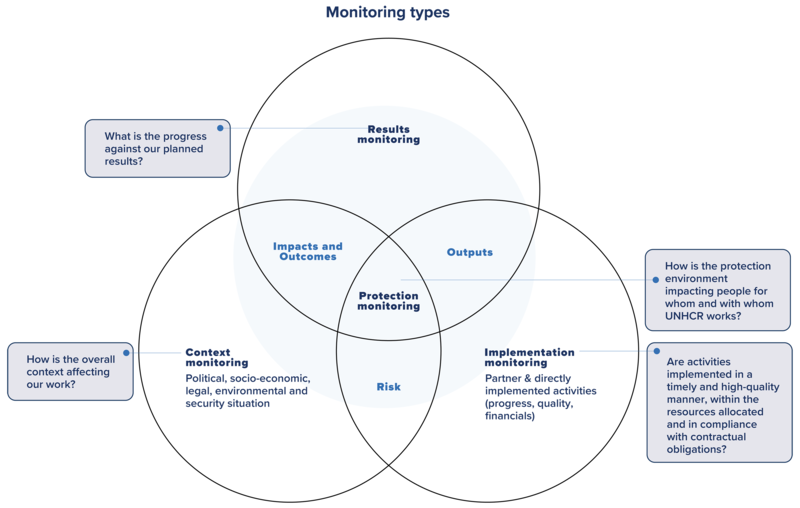

- The M&E plan consists of three components: means of verification (MoV) for results indicators, the assessment, monitoring and evaluation (AME) workplan, and M&E priorities.

- Operations use MoV to document how they will obtain data for each results indicator. MoV are defined in the PLAN for Results phase and last for the duration of the strategy.

- They use the AME workplan to map out planned activities related to assessment, monitoring, and evaluation, along with their costs. The AME workplan is first developed during the PLAN for Results phase and refined annually.

- Operations identify and summarize the three to five most important M&E-related activities over the course of the strategy. These M&E priorities are defined in the PLAN for Results phase and last for the duration of the strategy.

Monitoring and evaluation (M&E) is a central part of results-based management. It provides timely, reliable and relevant evidence to inform strategic planning, guide strategic reorientation, and identify adjustments during implementation. It also supports decision-making and accountability, enhances credibility and learning, and aids advocacy, resource mobilization and risk management.

Operations develop an M&E plan to identify how to obtain the necessary evidence for monitoring and evaluating their strategy.

Monitoring

Monitoring is the routine gathering, analysis and use of information to track how well UNHCR is implementing its interventions and achieving its planned results, and to understand changes in the operating and protection environment and how they may affect forcibly displaced and stateless people and UNHCR’s work.

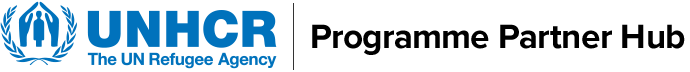

UNHCR distinguishes between four types of monitoring: results monitoring, implementation monitoring, protection monitoring and context monitoring.

Results monitoring

Results monitoring is what UNHCR does to gather evidence of progress towards planned results. This involves reviewing the strategy’s outputs, outcomes, and impacts, monitoring the related indicators in the operation’s results framework, and reviewing community feedback and resources. Results monitoring informs course correction and helps determine necessary adjustments. See PLAN – Section 5 for more information on each results level.

Results monitoring can also involve monitoring indicators in other frameworks that UNHCR is obliged to report against. Examples include monitoring requirements for Refugee Response Plans (RRPs), Humanitarian Response Plans (HRPs), and the United Nations Sustainable Development Cooperation Framework (UNSDCF). Where possible, operations are encouraged to streamline any additional indicator reporting requirements into the operation’s results framework. See PLAN – Section 5 for more information.

Results managers lead results monitoring activities, supported by information management and M&E personnel. More information on results monitoring can be found in GET – Section 3.

Implementation monitoring

Implementation monitoring is what UNHCR does to understand how well projects are progressing, whether they are implemented by funded partners or by UNHCR with a direct implementation (DI) budget. Implementation monitoring involves:

- Monitoring the progress of projects.

- Assessing the availability and quality of services and/or delivered goods.

- Analysing financial trends against the required and available budget.

- Verifying the progress of funded partners.

- Monitoring the compliance of funded partners.

- Monitoring the compliance of contractors or vendors with their contractual obligations.

Implementation monitoring supports output-level results monitoring. To calculate the value of a single output indicator, an operation may need to combine data shared by several funded partners with data from monitoring of projects implemented with a direct implementation budget.

Results managers lead implementation monitoring activities and are supported by information management and M&E personnel. Programme and project control personnel lead verification activities, financial analysis and compliance monitoring. More information on implementation monitoring can be found in GET – Section 4.

Protection monitoring

Protection monitoring is what UNHCR does to obtain essential information about the impact of the protection environment on the people UNHCR works with and for. It generates information about the protection environment, which can include risks and threats, incidents of rights violations, resulting needs, impact on access to services, reported priorities, and more. Depending on the operational context and need, protection monitoring can be focused on themes, incidents, populations, and phases of displacement. Protection monitoring can also provide evidence for specific results indicators and help to contextualize and analyse results monitoring data.

Protection colleagues lead protection monitoring activities, supported by information management and M&E personnel.

Context monitoring

Context monitoring is what UNHCR does to identify developments that may affect the situation of forcibly displaced and stateless people and UNHCR’s work. Context monitoring involves staying up to date with political, security, socio-economic, legal, environmental and policy developments, as well as donor interests. Operations do this through media monitoring; discussions with governments, donors and other stakeholders; consultations with forcibly displaced and stateless people of different ages, genders and other diversity characteristics; and observation of developments or risks on the ground.

Context monitoring findings help operations track how the risks and assumptions outlined in their theory of change evolve, guiding their programming decisions and adjustments. They also support internal planning during the situation analysis stage as well as inter-agency planning processes. Finally, context monitoring helps to situate and explain findings from the other three types of monitoring outlined above. All personnel in an operation engage in context monitoring.

💡 KEEP IN MIND Context, protection, and implementation monitoring contribute to results monitoring.


Evaluation

An evaluation is an independent, impartial, and systematic assessment of an activity, project, programme, strategy, policy, topic, theme, sector, operational area, or institutional performance.

The main purpose of an evaluation is to facilitate learning and accountability, inform policy decisions and advocacy and guide strategic and programmatic choices. This helps generate knowledge and enables UNHCR to build on successes and highlight good practices.

The Evaluation Office is responsible for the decision to commission an independent strategic evaluation at the global level. The Head of the Evaluation Office consults with the Senior Executive Team (SET), senior management in bureaux and headquarters divisions and other independent oversight providers to identify areas for evaluation. The selection of what to evaluate may be informed by:

- Requirements in UNHCR policy and strategy documents.

- The need to develop and implement global policies, strategies, and operational engagements, with evaluations timed to inform their design or revision.

- The need to measure UNHCR’s contribution to collective efforts.

- Demand from stakeholders.

Division directors or heads of entity determine the need for management-commissioned divisional or sector-specific evaluations or evaluations of global programmes and are responsible for commissioning, managing and carrying them out. Findings from these evaluations can inform regional and country multi-year plans, scale up innovation, and ensure comprehensive coverage of programmatic areas.

Factors influencing management-commissioned divisional or sector-specific evaluations or evaluations of global programmes include:

- The use of partnership, advocacy or programmatic approaches and interventions across multiple countries, which presents an opportunity to conduct a multi-country evaluation on a particular theme to enable cross-learning and reduce evaluation costs.

- Compliance with provisions in grant agreements or donor contribution agreements.

- Joint approaches with UN organizations and partners.

- The need for new evidence and notable changes in regional contexts.

The representative and bureau director consult and decide on evaluations at the country or regional level, considering the following factors:

- The need for objective evidence to inform the preparation and review of multi-year strategic plans and assess the outcomes and scalability of innovative programmes.

- Compliance with provisions in grant agreements or donor contribution agreements.

- Joint evaluation initiatives with UN organizations and partners, including governments.

- Evidence needs specific to the country context and changes in the operational environment.

Findings from these evaluations can inform multi-year country plans, facilitate the scaling up of innovations and ensure comprehensive coverage of programmatic areas.

Typology of evaluations

| Level | Type | Commissioning unit | Frequency | Accountability for management response |
|---|---|---|---|---|
| Global – independent | Corporate policy, strategy, thematic | Evaluation Office | At least once in 10 years. | SET member |
| | Emergency response | Evaluation Office | All L3 emergencies within 15 months of declaration. L2 emergencies at the request of the SET or bureaux. | Assistant High Commissioner for Operations |
| Global – commissioned by management | Thematic or programme-specific evaluations | Division or entity | Coverage and frequency determined by the commissioning unit. | Division director or head of entity |
| Regional | Multi-country, thematic or programmatic evaluations | Bureau | Coverage and frequency determined by the commissioning unit. | Bureau director |
| Country | Country strategy evaluations | Bureau | All operations to be subject to some form of evaluation during a multi-year strategy cycle or at least once every five years. This coverage norm to be phased in over the life of the Evaluation Policy. | Representative and bureau director |
| | Thematic, programme- and project-level evaluations | Country or multi-country office | | Representative |

In accordance with the Evaluation Policy, country operations are required to commission at least one evaluation per multi-year strategy or at least once every five years, while bureaux and headquarters divisions and entities are strongly encouraged to do the same. Operations are encouraged to plan an evaluation when there is an opportunity to adjust strategies and programming, to make changes to an ongoing intervention, or to refocus a strategy and approach to improve results for affected communities. They also plan and resource any project evaluations that donor agreements make mandatory.

An evaluation typically takes 6-12 months to complete. Therefore, operations are encouraged to plan ahead, especially if the evaluation will inform strategic changes in the next multi-year cycle.
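The timing rules in this section lend themselves to simple date arithmetic: L3 emergencies are evaluated within 15 months of declaration, country operations aim for coverage at least once every five years, and an evaluation takes roughly 6–12 months. The sketch below is a hypothetical planning helper, not a COMPASS feature. It assumes the 15-month window is treated as a due date, and it simplifies the country coverage norm to the five-year condition only (it ignores the "once per multi-year strategy" alternative).

```python
from datetime import date
from typing import Optional, Tuple


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months (negative values go back), clamping to the month end."""
    month_index = d.month - 1 + months
    year, month = d.year + month_index // 12, month_index % 12 + 1
    next_month_start = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
    days_in_month = (next_month_start - date(year, month, 1)).days
    return date(year, month, min(d.day, days_in_month))


def l3_evaluation_due_by(declared: date) -> date:
    """Assumed due date for an L3 emergency evaluation: 15 months after declaration."""
    return add_months(declared, 15)


def five_year_coverage_met(last_evaluation: Optional[date], today: date) -> bool:
    """Simplified coverage check: has the operation been evaluated in the last five years?"""
    return last_evaluation is not None and last_evaluation >= add_months(today, -60)


def evaluation_start_window(findings_needed_by: date) -> Tuple[date, date]:
    """Latest start dates assuming a 12-month and a 6-month evaluation, respectively."""
    return add_months(findings_needed_by, -12), add_months(findings_needed_by, -6)


print(l3_evaluation_due_by(date(2024, 3, 1)))                       # 2025-06-01
print(five_year_coverage_met(date(2019, 5, 1), date(2025, 1, 15)))  # False
cautious, optimistic = evaluation_start_window(date(2026, 9, 30))
print(cautious, optimistic)                                         # 2025-09-30 2026-03-30
```

Planning against the cautious (12-month) start date leaves room for the longer end of the typical duration, which matters most when findings must feed into the next multi-year strategy.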

Budgeting for evaluations is vital to allocate resources effectively. The Evaluation Office funds global evaluations related to policies, thematic areas and strategies, while bureaux fund multi-country or regional evaluations related to policies, thematic areas, strategies, programmes and projects. Country operations are responsible for funding thematic, programme, and project evaluations at the country level.

Country operations identify at least one evaluation and record it as an M&E priority in the multi-year M&E plan. It is important to budget for any evaluation costs in the “Resource Management” module of the strategy.

Linkages between monitoring and evaluation

Quality monitoring is essential for conducting meaningful evaluations because:

- Monitoring may identify issues that require deeper review through an evaluation and therefore helps ensure that an evaluation focuses on the right questions.

- Monitoring can provide valuable information that helps contextualize the findings of an evaluation.

Quality evaluations can, in turn, inform future monitoring activities by:

- Highlighting specific areas of a programme that require closer or more frequent monitoring.

- Providing insights on how UNHCR can improve its approach to monitoring, such as developing new tools or improving the quality of data collected.

Both monitoring and evaluation demonstrate our accountability, support evidence-informed decision-making, and help identify good practices and learning. However, there are also differences between the two, which are outlined in the table below.

Differences between monitoring and evaluation

| Monitoring | Evaluation |
|---|---|
| Continuous – carried out throughout the strategy implementation. | Periodic – at least one evaluation during a multi-year strategy cycle or at least once every five years. |
| Carried out by UNHCR personnel or partners (or a third-party monitoring entity). | Carried out by independent evaluators. |
| Some data is reported in the public domain. | All reports are shared in the public domain. |
| Helps UNHCR understand the evolving context as well as progress towards results. | Helps UNHCR understand why results are or are not being achieved. |
| Can demonstrate how well interventions are being implemented in line with what was planned in the strategy. | Demonstrates whether we are focusing on the right interventions at the right scale and identifies any unintended effects of those interventions. |

The M&E plan guides data collection and analysis throughout the strategy to measure progress towards planned results. Work on the M&E plan begins when indicators are defined for the multi-year results framework.

The M&E plan consists of three components:

- Means of Verification (MoV): Operations use the MoV to document how they will obtain data for each results indicator. MoV are defined in the PLAN for Results phase and last for the duration of the strategy.

- Assessment, monitoring and evaluation (AME) workplan: Operations use the AME workplan to map out planned activities related to assessment, monitoring and evaluation, along with their costs. The AME workplan is developed during the PLAN for Results phase and refined annually during annual implementation planning. It is a living document that operations continuously update throughout the year.

- M&E priorities: Operations use the M&E priorities to reflect the three to five most important M&E-related activities over the course of the strategy. M&E priorities are defined in the PLAN for Results phase and last for the duration of the strategy.

Define the planned means of verification (MoV) for each indicator

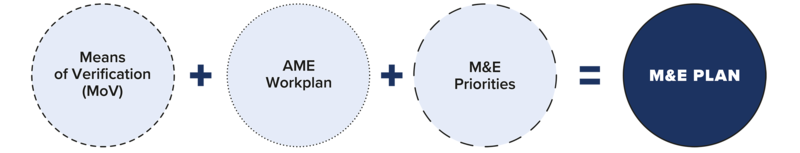

The planned MoV outline how operations obtain data for each results indicator. Defining MoV for an indicator involves deciding on the data source, monitoring activity, data collection frequency, and internal and external responsibility.

Results managers are responsible for defining the planned MoV for each results indicator, which then apply for the duration of the strategy and are only revised if there is a permanent change in how the data will be obtained. For example, if an operation initially plans to conduct a survey to obtain data for several indicators but later finds that the data will be available from a government survey, it may update the planned MoV to reflect this.

MoV components

Operations may work on the MoV offline to facilitate collaboration before they document them in the MoV planning table in COMPASS.

Results managers are responsible for defining the planned MoV for indicators under their area(s) of responsibility. They are supported by information management and M&E personnel.

Step 1: Identify the data sources for each indicator

Data sources refer to the origins or providers of indicator data, i.e., from where or from whom the indicator data is obtained. When determining data sources, results managers consider:

- UNHCR datasets stored on the Raw Internal Data Library (RIDL) from recent data collection exercises.

- Data from PRIMES applications (including case management or registration data), or data from sectoral monitoring systems.

- Secondary data from partners, the government, or other stakeholders.

Results managers assess the credibility, relevance, and reliability of the planned data source and record it in the MoV by selecting the most appropriate option from a pre-defined list:

- Government data

- ProGres/PRIMES

- UNHCR

- Funded partners

- Non-funded partners

- Other

It is possible to select two data sources per indicator. For example, a proportion indicator may require a different data source for the numerator and denominator. In such cases, operations use the “data source comments” field to explain.
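To illustrate why a proportion indicator may need two data sources, the sketch below computes a percentage whose numerator comes from one source and denominator from another, and builds the kind of note an operation might enter in the “data source comments” field. The class, the indicator and the figures (1,250 enrolled children reported by funded partners out of 5,000 school-age children in ProGres/PRIMES) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProportionIndicator:
    """A proportion indicator whose numerator and denominator come from different sources."""
    numerator: int
    numerator_source: str
    denominator: int
    denominator_source: str

    def value_percent(self) -> float:
        if self.denominator <= 0:
            raise ValueError("denominator must be a positive count")
        return 100 * self.numerator / self.denominator

    def data_source_comment(self) -> str:
        # Text of the kind an operation might record in the "data source comments" field.
        return f"Numerator: {self.numerator_source}; denominator: {self.denominator_source}"


enrolment = ProportionIndicator(
    numerator=1250, numerator_source="Funded partners",
    denominator=5000, denominator_source="ProGres/PRIMES",
)
print(enrolment.value_percent())        # 25.0
print(enrolment.data_source_comment())  # Numerator: Funded partners; denominator: ProGres/PRIMES
```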

Step 2: Define monitoring activities for each indicator

Monitoring activities refer to what UNHCR does to obtain indicator data. This is different from the monitoring activities partners might carry out. The choice of monitoring activity depends on the specific requirements of each indicator and the operational context.

To measure impact and outcome results, operations may conduct document analysis, i.e., they review national-level survey reports that include data on forcibly displaced and stateless people, government administrative data, or legal documents. They may also carry out monitoring system management, i.e., obtain results data from UNHCR systems, such as proGres, where the data is representative of the population of interest. Depending on the nature of the indicator, qualitative monitoring activities may provide additional insights.

For output-level results, operations may also conduct document analysis, which can entail a review of UNHCR field records, distribution lists, or reports from funded partners. Where data is sourced from UNHCR systems, such as proGres, or sectoral monitoring systems that cover the population assisted by UNHCR and funded partners, they may carry out monitoring system management. Operations may conduct qualitative monitoring activities or protection monitoring activities, for instance, through direct observation, key informant interviews or focus group discussions. Sometimes, data from various sources need to be combined to calculate output indicator values, such as data from UNHCR field records and data from funded partners, which may be available in tools like ActivityInfo. Care should be taken to avoid double counting. See GET - Section 1 for more information on activity tracking.
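The double-counting risk mentioned above arises when the same person appears in more than one dataset, for example in both UNHCR field records and a funded partner’s report. A minimal sketch, assuming every record carries a shared individual identifier (a hypothetical ID such as a registration number; in practice such an identifier is not always available), counts distinct people rather than summing counts:

```python
from typing import Iterable, Set


def unique_reached(*sources: Iterable[str]) -> int:
    """Count distinct individuals across sources, so anyone listed more than once counts once."""
    seen: Set[str] = set()
    for source in sources:
        seen.update(source)
    return len(seen)


# Hypothetical individual IDs from three data sources for one output indicator.
unhcr_field_records = ["P-001", "P-002", "P-003"]
partner_a = ["P-003", "P-004"]
partner_b = ["P-004", "P-005", "P-005"]

naive_total = len(unhcr_field_records) + len(partner_a) + len(partner_b)
print(naive_total, unique_reached(unhcr_field_records, partner_a, partner_b))  # 8 5
```

Simply adding the three lists would report 8 people reached, while only 5 distinct individuals appear. Where no shared identifier exists, operations would need another way to reconcile overlaps, such as agreeing on geographic or activity boundaries between partners.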

Results managers record the planned monitoring activity in the MoV by selecting the most appropriate option from a pre-defined list:

- Survey

- Document analysis

- Monitoring system management

- Protection monitoring

- Qualitative monitoring activity

- Other

Operations can add details to the planned monitoring activity using the “comments” field in the MoV. For example, if they are planning a survey, they may indicate in the comments “RMS integrated with socio-economic insights survey”.

It is important to select a meaningful combination of data sources and monitoring activities in the MoV. Here are a few examples:

- For an indicator that requires legal analysis of nationality law frameworks, “document analysis” would be an appropriate monitoring activity in combination with the data source “government data”. It would not be appropriate to source such data from “qualitative monitoring activities”, such as focus group discussions, or from “surveys”.

- For an impact-level indicator that requires representative data on the living situation of forcibly displaced and stateless people, a “survey” might be the appropriate monitoring activity, together with the data source “UNHCR” (if UNHCR is conducting the survey).

- For an output-level indicator that requires a review of distribution lists to understand how many people have benefitted from a service or product delivered by UNHCR, “document analysis” would be an appropriate monitoring activity in combination with the data source “UNHCR”.

Results managers combine data sources and monitoring activities based on the options in the table below.

Possible combinations of data sources and their associated monitoring activities

| Data source | Possible monitoring activity |
|---|---|
| Government data | |
| ProGres / PRIMES | |
| UNHCR | |
| Funded partner | |
| Non-funded partner | |
| Other | |

Step 3: Decide on data collection frequency

Data collection frequency refers to how often data is gathered. This is different from the reporting frequency, i.e., how often data is reported in COMPASS. The frequency of data collection depends on the nature of the indicator, the data source and the level of change that the indicator measures. Data for some survey-based indicators may be collected only once every few years, but other data is collected continuously, such as the number of asylum applications or cash assistance delivery. Operations ensure that the frequency of data collection is based on the availability and usefulness of the data, rather than the “ideal” frequency with which the operation would like to have the data.

Step 4: Select responsibility

Responsibility indicates who is responsible for obtaining the data. There are two types of responsibility:

- Internal responsibility: This refers to the results manager responsible for each indicator, such as the senior protection officer.

- External responsibility: This identifies any external party involved in providing the data. For example, if the government is the source of the data, the results manager will note the exact ministry or the title of the focal point who will provide it.

Even where an external party provides the data, the UNHCR results manager remains accountable for ensuring that data for the indicator is available. The results manager is therefore still reflected under “internal responsibility”.
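The four MoV components can be pictured as one record per indicator. The sketch below models such a record using the pre-defined data source and monitoring activity lists from Steps 1 and 2, and flags gaps a reviewer might look for. It is an illustrative data structure only: the class and field names are assumptions and do not reflect the actual COMPASS MoV planning table.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class DataSource(Enum):
    GOVERNMENT_DATA = "Government data"
    PROGRES_PRIMES = "ProGres/PRIMES"
    UNHCR = "UNHCR"
    FUNDED_PARTNERS = "Funded partners"
    NON_FUNDED_PARTNERS = "Non-funded partners"
    OTHER = "Other"


class MonitoringActivity(Enum):
    SURVEY = "Survey"
    DOCUMENT_ANALYSIS = "Document analysis"
    MONITORING_SYSTEM_MANAGEMENT = "Monitoring system management"
    PROTECTION_MONITORING = "Protection monitoring"
    QUALITATIVE_MONITORING_ACTIVITY = "Qualitative monitoring activity"
    OTHER = "Other"


@dataclass
class MeansOfVerification:
    indicator: str
    data_sources: List[DataSource]           # Step 1: one or two sources
    monitoring_activity: MonitoringActivity  # Step 2
    collection_frequency: str                # Step 3, e.g. "Annually"
    internal_responsibility: str             # Step 4: the accountable results manager
    external_responsibility: Optional[str] = None
    data_source_comments: str = ""

    def problems(self) -> List[str]:
        """Return completeness issues; an empty list means none were found."""
        issues = []
        if not 1 <= len(self.data_sources) <= 2:
            issues.append("select one or two data sources")
        if len(self.data_sources) == 2 and not self.data_source_comments.strip():
            issues.append("explain the two data sources in the data source comments")
        if not self.collection_frequency.strip():
            issues.append("state the data collection frequency")
        if not self.internal_responsibility.strip():
            issues.append("name the results manager under internal responsibility")
        if DataSource.GOVERNMENT_DATA in self.data_sources and not self.external_responsibility:
            issues.append("name the ministry or focal point under external responsibility")
        return issues


mov = MeansOfVerification(
    indicator="Hypothetical nationality-law indicator",
    data_sources=[DataSource.GOVERNMENT_DATA],
    monitoring_activity=MonitoringActivity.DOCUMENT_ANALYSIS,
    collection_frequency="Annually",
    internal_responsibility="Senior Protection Officer",
)
print(mov.problems())  # ['name the ministry or focal point under external responsibility']
```

Note that internal responsibility is required even when an external party supplies the data, mirroring the rule that the UNHCR results manager remains accountable.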

Review the M&E plan

Once the M&E plan is finalized, the planning coordinator, with support from M&E personnel, leads a review of the plan with the MFT to ensure its quality and coherence. Key considerations during this review include:

- Are the MoV complete and logical for each indicator? For example, have results managers followed the indicator guidance to identify the appropriate and feasible combinations of data source(s) and monitoring activities?

- Is it clear who is responsible for each indicator in the MoV?

- Is the AME workplan complete with all planned activities and their costs? Is it clear who will carry out these activities in the AME workplan?

- Is there any duplication of activities in the AME workplan?

- Have all costs been included in the budget in the “Resource Management” module?

- Do the M&E priorities address the most strategically important actions needed?

- Is the overall M&E plan realistic and achievable?
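Some of the AME workplan questions above (are costs included, is it clear who carries out each activity, is there duplication) can be checked mechanically before the MFT discussion. The sketch below assumes a hypothetical, simplified workplan structure with a name, activity type, responsible person and estimated cost; the activity names are invented for illustration, and the duplicate check only catches activities with the same name and type.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AmeActivity:
    name: str
    activity_type: str         # "assessment", "monitoring" or "evaluation"
    responsible: str
    cost_usd: Optional[float]  # None means the cost has not been estimated yet


def review_ame_workplan(activities: List[AmeActivity]) -> List[str]:
    """Flag missing costs, unassigned activities and likely duplicates."""
    findings = []
    for a in activities:
        if a.cost_usd is None:
            findings.append(f"{a.name}: cost missing")
        if not a.responsible.strip():
            findings.append(f"{a.name}: no one assigned")
    counts = Counter((a.name.strip().lower(), a.activity_type) for a in activities)
    for (name, kind), n in counts.items():
        if n > 1:
            findings.append(f"possible duplicate: '{name}' ({kind}) listed {n} times")
    return findings


workplan = [
    AmeActivity("Post-distribution monitoring", "monitoring", "Programme Officer", 12000.0),
    AmeActivity("Post-distribution monitoring", "monitoring", "Field Associate", 12000.0),
    AmeActivity("Country strategy evaluation", "evaluation", "", None),
]
for finding in review_ame_workplan(workplan):
    print(finding)
# Country strategy evaluation: cost missing
# Country strategy evaluation: no one assigned
# possible duplicate: 'post-distribution monitoring' (monitoring) listed 2 times
```

Such checks only surface candidates; whether two similar entries are genuine duplicates, and whether the plan is realistic, remains a judgment for the review.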

The bureau reviews the M&E plans of the operations in its area of responsibility, including the AME workplan, and provides support to refine them. Operations can discuss any identified support needs with their bureau throughout the strategy, based on the activities in the AME workplan.